The UX of analyst-focused business intelligence (Looker, Tableau, Power BI, Sigma, etc.) was never designed for an AI-augmented experience like Cursor or GitHub Copilot. So before we can consider what LLMs and agents can do for analysts, we need to rethink the experience.

Code to BI

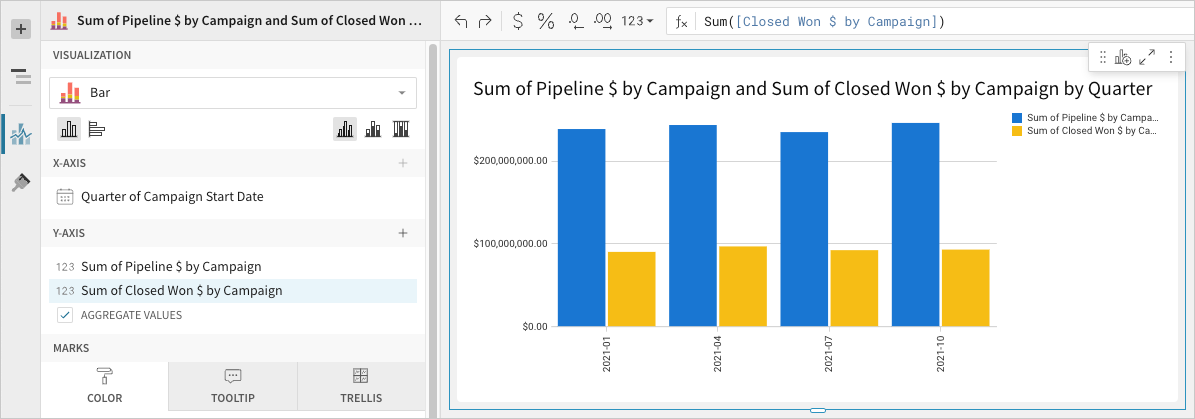

Where code is the entire story and narrative, a chart captures only a moment in time: your last thought. In code, you can scroll up to understand why the current line does what it does, and read comments for the context of intent and assumptions. In modern BI, you can’t.

The most you can do in your current visualization is undo to see past states in isolation. Beyond that, only the mic-drop conclusion of the story is ever saved. A visualization becomes just the final episode (spoiler alert: Daenerys is Jon Snow’s aunt). The 8 seasons of misdirection, the exploratory analytics and hypothesis testing, are all missing.

The “code” of BI, the story and narrative, is the missing piece for training and inference. Cursor’s code completion and chat would be ineffectual if it didn’t know what led up to the current line of code (left) or wasn’t able to draw upon the rest of your repository for context (right).

The narrative inherent to code allowed it to scale naturally to AI experiences, but this is not the case for BI. The current UX lacks the ability to recall prior steps, or to branch and combine different exploration paths to construct a conclusion. Notebooks (such as Jupyter, Colab, or Hex) have filled this need for data scientists, allowing them to “show their work” and remember the different facets that need to be brought together in the end, with existing coding assistants being a natural add-on.

However, the code of notebooks is also the reason many analysts and business users prefer traditional BI. How would the UX for BI need to change to fully harness an AI assistant?

Building with Memories

We solve the missing narrative with memories in the HyperGraph, which becomes the foundation of suggestions, natural language query (NLQ), and agentic discovery. Every exploration by your entire team is stored as a branching mind map (as opposed to sequential cells in a notebook) to fully encode the thought process of an analyst.

But the HyperGraph is not just a static, read-only artifact of what has happened. It’s the interface for non-linear, AI-assisted exploration through branching and merging of the memories in the graph. Simply drag new edges out of existing memories to extend an analysis, and select multiple memories to combine them into conclusions.
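
To make the branching and merging concrete, here is a minimal sketch of how a graph of memories could be modeled. It is not the HyperGraph implementation: `Memory`, `MemoryGraph`, `extend`, `merge`, and `walk` are hypothetical names chosen for illustration, and a memory is reduced to a plain text description of a query.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Memory:
    """One saved exploration step (hypothetical structure, for illustration only)."""
    id: int
    query: str            # what the analyst built or asked at this step
    parents: tuple = ()   # ids of the memories this step was derived from


class MemoryGraph:
    """A toy stand-in for a branching graph of memories."""

    def __init__(self):
        self._ids = count(1)
        self.memories = {}

    def add(self, query, parents=()):
        memory = Memory(next(self._ids), query, tuple(parents))
        self.memories[memory.id] = memory
        return memory

    def extend(self, parent, query):
        """Branch: drag a new edge out of an existing memory."""
        return self.add(query, parents=(parent.id,))

    def merge(self, parents, query):
        """Combine several memories into a single conclusion."""
        return self.add(query, parents=[p.id for p in parents])

    def walk(self, memory):
        """Return the queries of every ancestor of `memory`, oldest first."""
        seen, order = set(), []

        def visit(node_id):
            if node_id in seen:
                return
            seen.add(node_id)
            node = self.memories[node_id]
            for parent_id in node.parents:
                visit(parent_id)
            order.append(node.query)

        visit(memory.id)
        return order


graph = MemoryGraph()
root = graph.add("users by product")
cohorts = graph.extend(root, "segment by age cohort")
score = graph.extend(root, "aggregate by user score")
combined = graph.merge([cohorts, score], "user score by product and age cohort")
print(graph.walk(combined))
```

Unlike a notebook's sequential cells, a memory here can have several children (branches) and several parents (a merge), so the walk leading to any conclusion can be replayed in full.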

These interactions use the walks in the graph and similar memories across all assets in your organization to predict what comes next, much like how Cursor uses preceding lines of code and files across your repo to complete a task.

In this example we extend a single memory, first to segment by age cohorts, and then to aggregate by user score. Both the cohorts and the user score formula were recalled from past memories, specific to your organization, through our learned metrics layer. Finally, we bring these parallel paths back together to understand user scores across product and age segments, using only short prompts.

However, since we’re able to use the walk of the current memory to search across HyperGraphs, our suggestions are often accurate without explicit prompts, similar to tab auto-complete. By just grouping by age, we’re able to suggest age cohorts, and by adding the individual components of the user score, we’re able to suggest the combined formula as the next step. Combining these paths, we arrive at the same analysis.
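
One simple way to picture prompt-free suggestions is to match the tail of the current walk against past walks and vote on what followed. The sketch below uses plain frequency counting with back-off to shorter contexts. This is an illustrative stand-in, not the learned approach described here, and `suggest_next` and the sample walks are made up.

```python
from collections import Counter


def suggest_next(current_walk, past_walks, top_k=1):
    """Rank next steps by how often they followed the same recent steps.

    The longest matching context is tried first, backing off to shorter ones.
    """
    for context_len in range(len(current_walk), 0, -1):
        context = tuple(current_walk[-context_len:])
        votes = Counter()
        for walk in past_walks:
            # Slide over the past walk, leaving room for a following step.
            for i in range(len(walk) - context_len):
                if tuple(walk[i:i + context_len]) == context:
                    votes[walk[i + context_len]] += 1
        if votes:
            return [step for step, _ in votes.most_common(top_k)]
    return []


# Made-up walks standing in for memories from across an organization.
past_walks = [
    ["users", "group by age", "bucket into age cohorts"],
    ["orders", "group by age", "bucket into age cohorts"],
    ["users", "group by age", "filter to adults"],
    ["users", "add logins", "add purchases", "compute user score"],
]

print(suggest_next(["signups", "group by age"], past_walks))
print(suggest_next(["users", "add logins", "add purchases"], past_walks))
```

The first call has never seen a walk starting from "signups", so it backs off to the last step alone and still proposes the cohort bucketing that most often followed a grouping by age.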

Either through prompts or familiar shelves and pills, the HyperGraph and memories enable a multi-faceted, AI-augmented exploration not possible in traditional BI.

Beyond Single Memories

Extending, combining, and taking suggestions allow us to further the exploration one step at a time. Using these as primitives, and letting an AI decide which one to take with each additional query, grounded by past memories, enables agentic exploration.

Given a task from a starting memory, we first take time to think about the problem (à la OpenAI o1) by planning with related memories. With a plan, you can pick one of several agents (marketplace on the way) to execute the analysis through chain-of-thought reasoning. With each incremental query, we again leverage the path of memories to produce a cohesive next query.
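
The plan-then-execute loop can be sketched roughly as follows. Everything here is an assumption made for illustration: `run_agent`, `PRIMITIVES`, and the two toy functions are hypothetical, and the planner and the per-step chooser are hard-coded stubs where a real system would call a model grounded in related memories.

```python
PRIMITIVES = ("extend", "combine", "suggest")


def run_agent(task, start, plan_fn, choose_fn, max_steps=5):
    """Plan first, then execute one query per plan step.

    plan_fn(task, path)   -> list of step descriptions (stand-in for planning)
    choose_fn(step, path) -> (primitive, query), with primitive in PRIMITIVES
    """
    path = [start]                 # the walk of memories built so far
    plan = plan_fn(task, path)
    for step in plan[:max_steps]:
        # Each decision sees the whole path, so queries stay cohesive.
        primitive, query = choose_fn(step, path)
        if primitive not in PRIMITIVES:
            raise ValueError(f"unknown primitive: {primitive}")
        path.append(f"{primitive}: {query}")
    return plan, path


def toy_plan(task, path):
    # Hard-coded plan; a real planner would reason over related memories.
    return ["broaden the filter from turkeys to more animals",
            "total weight by year"]


def toy_choose(step, path):
    # Always extends the latest memory; a real agent would pick per step.
    return "extend", step


plan, path = run_agent(
    "extend the turkey analysis", "turkey weight by year", toy_plan, toy_choose
)
for entry in path:
    print(entry)
```

Keeping the agent restricted to the same primitives an analyst uses by hand means every automated step lands in the graph as an ordinary memory that can be inspected, branched, or merged later.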

Here we extend our Thanksgiving Day turkey analysis to those with a couple more legs to find that the “total weight of 2-legged animals shows a clear increasing trend from 2001 to 2023, indicating a shift towards higher consumption of poultry over the years.”

With memories in the HyperGraph, we’re able to leverage AI to augment exploration one step at a time or automate entire tasks. We’re excited to see where else this takes us.

Try it out and let us know what you think!